Merging CSV and Excel data using an SQLite database

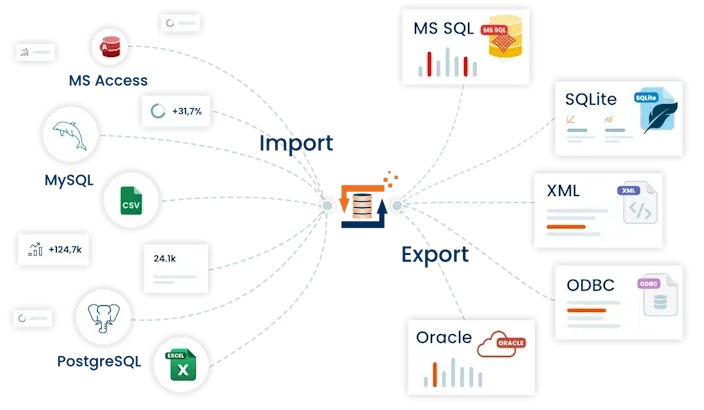

In this example we demonstrate how data from a CSV text file and an Excel list can be merged into a large export text file in flat file report format.

Note: As of version 4.1.4 the temporary SQLite database described is no longer needed! This example can completely and very easily be converted to rely on the InMemory Adapter tables. At the end of the article you will find a description (including a tutorial video, see further down the page) of how several data sources (CSV, Excel and more) can be merged and/or enriched by using the InMemory Adapter.

General information on Excel - CSV data merging

It is often the case that data derive from various (data) sources and need to be merged into a consolidated list (export file). For simplicity our example only merges two sources here. However, applying this method you can combine any number of sources and as much data as you wish.

For each source we require a separate FlowHeater Definition in order to first store the data temporarily in an SQLite database. Perhaps you are wondering why SQLite? Theoretically almost any database would suffice, but with SQLite we have the advantage that there is no need for supplementary database tools. FlowHeater is all we need to manipulate data with an SQLite database.

Import CSV text file into the SQLite database

We start by importing the CSV file into the SQLite database. First create a new FlowHeater Definition and choose the TextFile Adapter for the READ side and the SQLite Adapter for the WRITE side. Configure the READ side Adapter to select the CSV text file "text-data.csv" for input and close the Configurator for the CSV file.

Now position your mouse cursor in Designer over the SQLite Adapter on the WRITE side (do not open the Configurator yet) and select with a right mouse click "Adopt the READ fields" option.

Now open the Configurator for the SQLite Adapter on the WRITE side. For the database name enter "Addresses.sqlite" and enable the following "General" tab options:

- Create database if it does not exist

- Insert table if it does not exist

- Insert data

- Empty the table before importing

Once you have done this, switch to the "Fields / Data types" tab and unless it already exists type "t_Addresses" for the table name. It is necessary in this case, because when our SQLite database does not already exist we cannot select a table. Now we have to inform the SQLite Adapter which field of our CSV file should be used as a primary key. For this, click on the field list of the field ID, set the FlowHeater data type to integer and check the option "PrimaryKey" for this field. Close the Configurator popup of the SQLite Adapter with OK.

Now we must connect the fields of the CSV text file (READ side) with the fields of the SQLite database table (WRITE side). After this, we can import the CSV file into our SQLite database. Run this Definition once and store it with "Import-CSV-into-SQLite.fhd" as file name.

Import Excel worksheet into the SQLite database

We create another new FlowHeater Definition and for this select the Excel Adapter for the READ side and the SQlite Adapter for the WRITE side. Next we configure the Excel Adapter so that the Excel worksheet "Excel-Data.xls" can be read.

After this we open the Configurator for the SQLite Adapter on the WRITE side and select the same database "Addresses.sqlite" that we created in the previous steps. Enable in the "General" tab only the following options:

- Add fields that do not exist to the table

- Update data

And then switch to the "Fields / Data types" tab. The only thing to do here is to select the table "t_Addresses" from the SQLite tables list and click the "Load Schema" button once to load the fields already assigned to the table. Then close the SQLite Configurator popup with OK.

Now connect the ID field of the Excel file (READ side) with the ID field of the SQLite Adapter (WRITE side). At present we lack equivalent fields for the two additional fields of the Excel list in our SQLite database table. However, because we checked the "Add fields that do not exist to the table" option we can simply drag these fields to an empty area of the SQLite Adapter in the Designer. The SQLite Adapter automatically inserts these fields into the table and so amends the table schema for the new structure.

When you now run the Definition the SQLite table will be extended by two new fields and FlowHeater updates the table with data from the Excel file according to the ID field (PrimaryKey) into the SQLite database. Store this Definition with "Import-Excel-into-SQLite.fhd " as file name.

Export SQLite table as a flat file report

With our first two Definitions we have succeeded in consolidating the data from two sources (CSV and Excel) together. All that remains is to export the consolidated data as a flat file report. Create another new Definition and select for the READ side the SQLite Adapter and for the WRITE side the Textfile Adapter.

Open the SQLite Configurator and enter for the database name "Addresses.sqlite" to access the same database we created previously . Next switch to the "Fields / Data types" tab and select the "t_Addresses" table, initialize the fields list by clicking the "Load Schema" button and close the popup with OK.

Now open the TextFile Adapter Configurator on the WRITE side. Enter a file name for the output and amend the standard "Delimitation" option from "with delimiter" to "fixed width". Next switch to the "Fields / Data types" tab and click on the "Adopt the READ fields" button. Then close the Configurator popup with OK. Now we just have to connect the fields of the SQLite database (READ side) with the fields of the TextFile Adapter (WRITE side) and we can then start the export process. Store this Definition with "Export-SQLite-FlatFile.fhd" as file name.

Running automatically with the Batch Module

Using the Batch Module and the following CMD script you can automate the above 3 steps and potentially run these Definitions as a scheduled process via the Windows task planner.

@echo off

REM As necessary, amend for the installed path for FHBatch.exe

set FHBATCH="C:\Program Files\FlowHeater V2\BIN\FHBatch.exe"

REM Step 1: Export CSV to SQLite Datenbank

%FHBATCH% Import-CSV-into-SQLite.fhd

REM Step 2: Enrich the SQLite data with the Excel worksheet

%FHBATCH% Import-Excel-into-SQLite.fhd

REM Step 3: Export flat file report from the SQLite database

%FHBATCH% Export-SQLite-FlatFile.fhd

Implementation based on InMemory Adapter tables

In the above example it was written that a separate FlowHeater Definition must be setup to perform each of the individual steps. When implemented using InMemory Adapter tables and to use these for several processing steps, only one FlowHeater Definition is needed.

First processing step

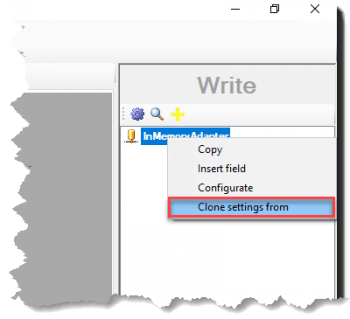

Data enrichment - adopt read fieldFirst we create a new Definition and select the TextFile Adapter for the READ side and the InMemory Adapter for the WRITE side, in order to read in the data that exists in CSV format and store it as a “temporary” InMemory Adapter table. With the Configurator of the TextFile Adapter we select the CSV file we want to load. We can now draw the fields on the READ side, either individually by using drag and drop with each to the WRITE side or more simply by a right mouse click on the InMemory Adapter on the WRITE side and in the context menu selecting “Clone settings from” to draw all the fields from the READ side to the WRITE side.

Data enrichment - adopt read fieldFirst we create a new Definition and select the TextFile Adapter for the READ side and the InMemory Adapter for the WRITE side, in order to read in the data that exists in CSV format and store it as a “temporary” InMemory Adapter table. With the Configurator of the TextFile Adapter we select the CSV file we want to load. We can now draw the fields on the READ side, either individually by using drag and drop with each to the WRITE side or more simply by a right mouse click on the InMemory Adapter on the WRITE side and in the context menu selecting “Clone settings from” to draw all the fields from the READ side to the WRITE side.

Note that we have not so far defined any settings in the InMemory Adapter. In fact, none are presently needed.

Now that the fields are connected from the READ to the WRITE side we can start a test run of the Definition and inspect the result. We see that a table with the present content of the CSV text file is displayed. Note that this data is “merely” saved in main memory!

There is not much more we can do at this stage, so let us continue.

Second processing step

Data enrichment - further processing stepNow we add to the same Definition a further processing step, with which we wish to read in another data source (Excel) to supplement the existing CSV data.

Data enrichment - further processing stepNow we add to the same Definition a further processing step, with which we wish to read in another data source (Excel) to supplement the existing CSV data.

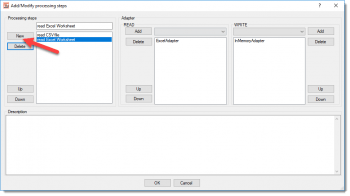

Using the menu option “Edit -> Processing Steps” add a new processing step and select for the READ side the Excel Adapter and for the WRITE side the InMemory Adapter.

We now configure the Excel Adapter so that the desired data is input from the right worksheet. Then we can draw these additional fields to the InMemory Adapter on the WRITE side, once more by drag and drop.

So the InMemory Adapter is able to tell which fields of any existing records to update, we need to configure some settings at this point. Note: With the default settings data would simply be inserted into the temporary table provided.

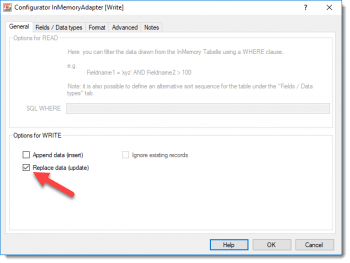

Data enrichment - updates onlyWe open the Configurator of the InMemory Adapter on the WRITE side and first configure that the InMemory Adapter shall only perform updates. To achieve this we check the option “Replace data (update)” under the General tab. To ensure records that may exist in the Excel worksheet but are missing in the CSV file do not get inserted, we ensure the option “Append data (insert)” remains unchecked. This way only those records that are already present in the temporary InMemory table are updated, everything else in the Excel worksheet is ignored.

Data enrichment - updates onlyWe open the Configurator of the InMemory Adapter on the WRITE side and first configure that the InMemory Adapter shall only perform updates. To achieve this we check the option “Replace data (update)” under the General tab. To ensure records that may exist in the Excel worksheet but are missing in the CSV file do not get inserted, we ensure the option “Append data (insert)” remains unchecked. This way only those records that are already present in the temporary InMemory table are updated, everything else in the Excel worksheet is ignored.

In addition, we must define one or more key fields to address the records we wish to update. For this we switch to the “Fields / Data types” tab where we mark the “ID” field and check the option “Use as key field for updates”. This way we inform the InMemory Adapter to detect incoming data that matches this field to determine whether a record exists and update it.

For this processing step make sure that the key field(s) on the READ side are connected with the same fields on the WRITE side. Only this way can the InMemory Adapter properly assign the Excel data to the already input CSV data.

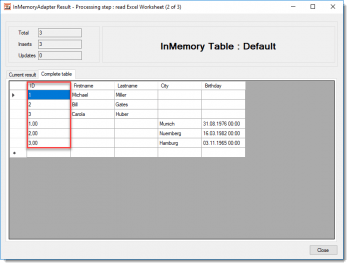

Data enrichment - trouble shootingNow we run the Definition and observe the results. At this stage two tables are opened, firstly the CSV data from the first processing step and secondly the Excel data from the second processing step. However, in this processing step no data gets updated and the record counters for Inserts and Updates are both zero. How is that possible?

Data enrichment - trouble shootingNow we run the Definition and observe the results. At this stage two tables are opened, firstly the CSV data from the first processing step and secondly the Excel data from the second processing step. However, in this processing step no data gets updated and the record counters for Inserts and Updates are both zero. How is that possible?

There is an easy way to find out. Configure the InMemory Adapter by also checking the option “Append data (insert)” and run the Definition again. Now we see that in the second processing step the InMemory Adapter has also added data to the table, but when we look at the format of the key field (ID) we realize why the update failed. The data from the CSV file are given as integers, whereas the data from the Excel worksheet have decimal places. We can quickly solve this problem by amending the FlowHeater data type for the key field (ID) in the InMemory Adapter to “Integer”. When we now rerun the Definition the result looks much better.

Third processing step

Now the contents of the temporary InMemory Adapter table are as we wanted. Now we need to save it somewhere or otherwise output it. It is of course also possible to use the InMemory Adapter on the READ side. We add a further processing step using the menu option “Edit -> Processing Steps” and select the InMemory Adapter on the READ side and the TextFile Adapter on the WRITE side, in order to write the merged and enriched data to a text file. All that remains to be done is connect the fields from the READ side to the WRITE side. Finally the Definition can be rerun and as a result it output an enriched text file that contains data from both the original CSV file and Excel worksheet.

Note: On the READ side the InMemory Adapter can also deliver a filtered result using a WHERE clause and/or in an alternatively sorted sequence according to the content of specified fields. If these options are not selected, the result will be ordered the same as the temporary table.

Example tutorial video

The tutorial video demonstrates the three processing steps described above: reading in the CSV file, updating it with data from Excel and finally saving the merged/enriched data as a text file. It also enlarges on the problem solving exercise with insert/update in the second processing step.

The englisch example tutorial video will be coming soon